AWS Project Libra

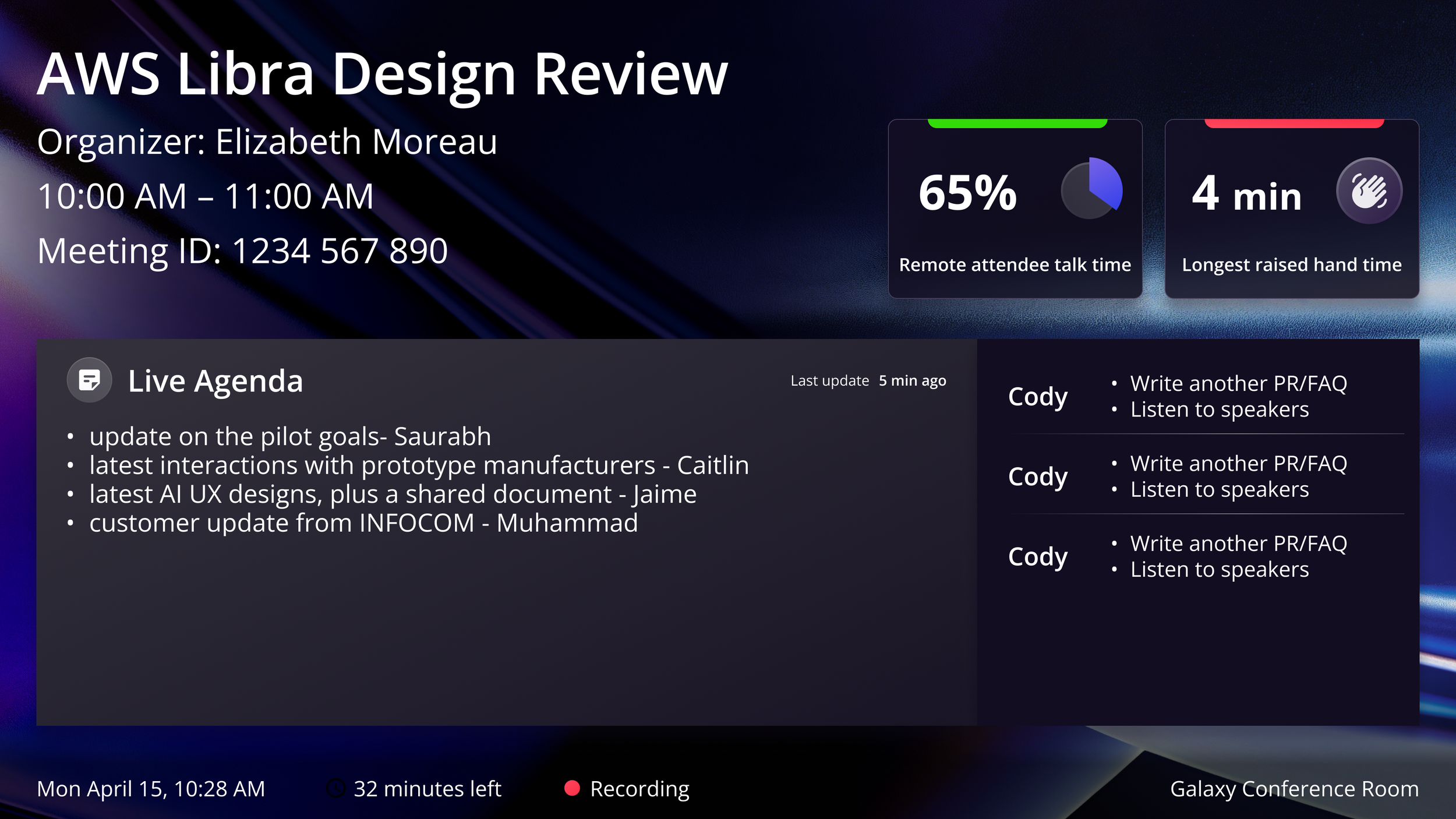

Libra is an AWS-developed audio-visual/video conferencing (AV/VC) system designed to bridge the gap between remote and in-person participants. As a UX Designer on the team, I was responsible for design decisions affecting how participants join a meeting, what they experience during it, and how they leave the room.

Role: Product Designer

Scope: End-to-end experience across in-room + remote participation

Timeline: 3 years

Research conducted: 30+ validation studies, 4 accessibility sessions, 2 large-scale surveys (N=491)

The Problem We Are Solving

When I joined the AWS hybrid meeting system team, the brief was clear: "Make the hybrid meeting experience better."

After shadowing 15 team meetings in the first month, I noticed remote participants could see and hear fine. But they couldn't participate fine.

In a 10-person meeting with 7 in-room and 3 remote:

In-room participants made eye contact, read body language, and jumped into conversations fluidly

Remote participants watched a static wide-angle shot of the room, waited for awkward pauses, and were frequently talked over

The meeting effectively ran as if the remote people weren't there

The three tensions we had to resolve:

Remote vs. In-room satisfaction: Initial pilot showed remote participants loved individual tiles (+40 NPS) while in-room participants felt "watched" and distracted (-20 NPS). We couldn't make both groups happy with the same approach.

Equity vs. Adoption: Individual cameras solved equity but created in-room discomfort. If we removed cameras to improve comfort, we'd lose the equity we were trying to create.

AI adoption vs. Privacy concerns: Meeting Intelligence survey (N=129) showed 83.9% wanted AI summaries, but 13 users explicitly rejected engagement analysis as "frightening" and "invasive." We had to use AI to reduce work without triggering surveillance concerns.

Decision Approach

Given the compressed timeline, prioritization became critical.

I focused on delivering a grounded experience rather than exploring speculative AI behaviors — emphasizing clarity, relevance, and participant control.

This meant intentionally rejecting several ideas that introduced unnecessary automation or surfaced information without strong context.

Collaboration

Partnered closely with PM and engineering to define a feasible scope for the demo, while coordinating with third-party teams to understand platform constraints early.

In parallel, conducted targeted user conversations to validate what level of AI intervention felt supportive rather than intrusive.

The Outcome

Demoed and shipped the AI meeting agent after 2 months. Trust remained high: beta program NPS stayed above 85.

One Deep Dive

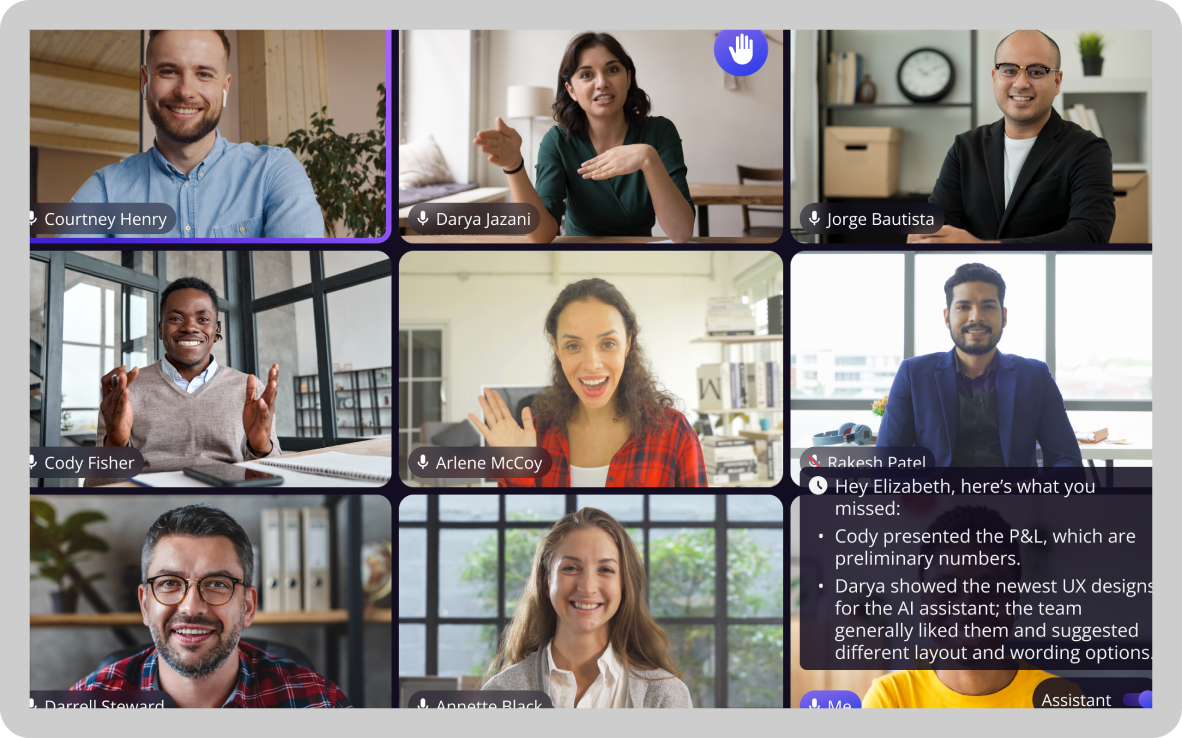

Designing the AI meeting agent

With only two months to deliver a working demo, the challenge was not just introducing AI into meetings — but determining when it should speak, and when it should stay silent.

Business pressure: This was 2023. Every competitor was shipping AI engagement features. Leadership asked why we weren't leveraging ML capabilities.

Key Constraints

This work required navigating several system and product constraints simultaneously:

Designing within an existing component library to ensure speed and consistency

Integrating summaries from third-party platforms such as Zoom

Connecting the experience to user identity and authorization models

Filtering non-essential information to prevent cognitive overload

Designing with neurodiverse participants in mind, where excessive prompts can create friction

Impact & Deliverables

Business Outcomes:

477+ meetings with 3,151+ in-room participants across global AWS offices over 3.5 years

Increased SCAT scores by 13% (System Customer Acceptance Testing—measures customer willingness to recommend in procurement decisions), representing approximately $2.8M in influenced sales pipeline

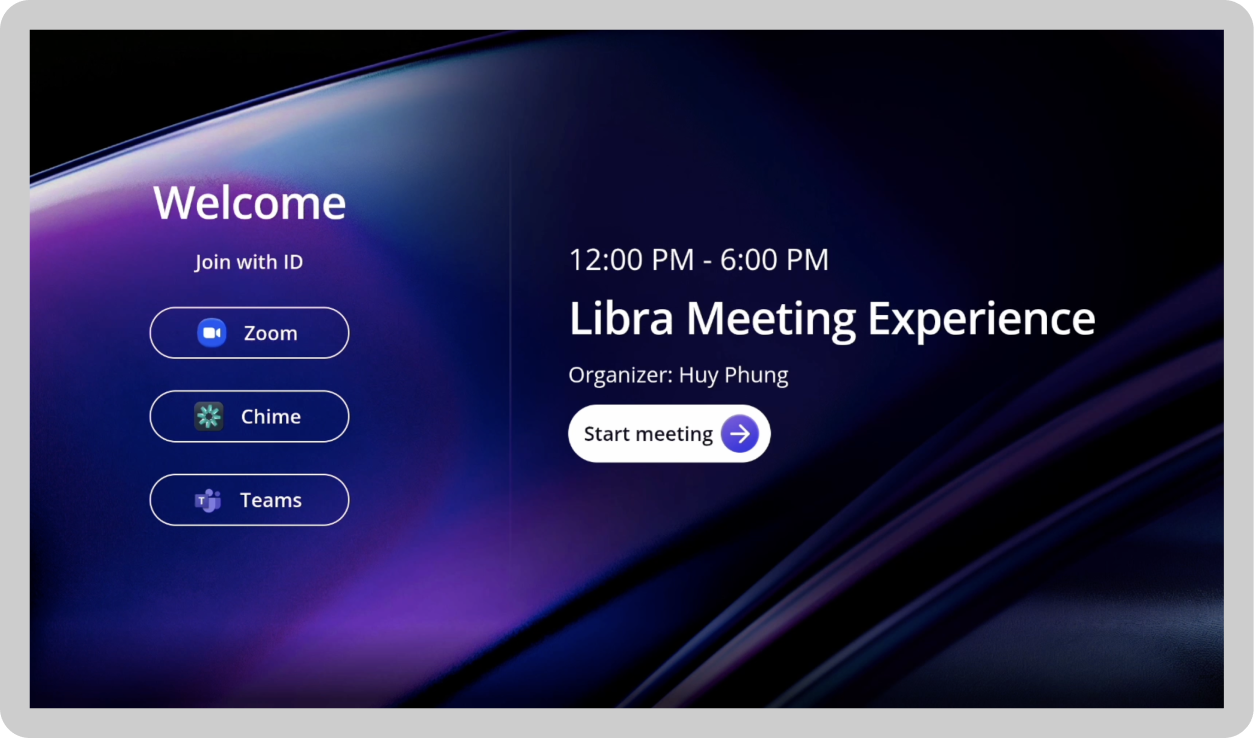

Solved third-party meeting integration challenges across Zoom, Teams, and Chime. Key problem: different ID formats caused connection failures. Designed pre-validation UX that caught errors early and guided users to the correct format.

VP endorsement: "This is better than our OP1 executive rooms—can we deploy in all leadership spaces?"
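The meeting ID pre-validation mentioned above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the ID patterns are hypothetical placeholders rather than the real Zoom, Teams, or Chime formats, and the names `ID_PATTERNS`, `ValidationResult`, and `prevalidate_meeting_id` do not come from the Libra codebase.

```python
import re
from dataclasses import dataclass

# Hypothetical patterns for illustration only; real platform ID formats differ.
ID_PATTERNS = {
    "zoom": re.compile(r"\d{9,11}"),
    "teams": re.compile(r"\d{9,15}"),
    "chime": re.compile(r"\d{10}"),
}


@dataclass
class ValidationResult:
    ok: bool
    normalized: str
    hint: str = ""


def prevalidate_meeting_id(platform: str, raw_id: str) -> ValidationResult:
    """Check a meeting ID before connecting and return a corrective hint on failure."""
    pattern = ID_PATTERNS.get(platform.lower())
    if pattern is None:
        return ValidationResult(False, raw_id, f"Unsupported platform: {platform}")

    # Strip spaces and dashes that users often copy along with the ID.
    normalized = re.sub(r"[\s\-]", "", raw_id)
    if pattern.fullmatch(normalized):
        return ValidationResult(True, normalized)

    hint = f"This does not look like a {platform} meeting ID. Check the format and try again."
    return ValidationResult(False, normalized, hint)
```

The point of the sketch is the ordering: the ID is normalized and checked locally, so a formatting mistake produces a specific hint before any connection attempt is made.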

Design Decisions:

Led product strategy that convinced leadership to bet against industry convention (individual cameras vs. single wide-angle camera)

Made three critical product decisions that saved adoption: removed self-view by default (60% reduction in "feeling watched" complaints), killed the 16-tile grid feature despite customer requests, and built the AI meeting agent.

Established a primary/secondary display model that reduced cognitive load; 100% of people with disabilities in our studies preferred Libra over traditional conference rooms

Conducted and synthesized 40+ in-person usability tests and monthly user research to identify issues and drive rapid iteration

Accessibility Impact:

Led the accessibility audit and ensured Born Accessible compliance from day one, not as an afterthought

Designed for multiple disability types including neurodiverse users (ADHD, autism), low vision, deaf/hard of hearing, and physical disabilities

Validated that 100% of accessibility users preferred Libra over traditional conference rooms

Designed customizable captions, content zoom/pinch, and consistent information placement that became core product features

Ran accessibility workshops and training sessions to teach engineers accessibility fundamentals and design principles

Design system and foundations:

Scaled the enterprise design system (400+ responsive components) across Web, Mobile, and TV surfaces.

Reduced engineering implementation time and ensured WCAG accessibility compliance across the suite.

Created primary display (tablet) for active content and secondary display (TV) for passive reference—reducing split attention that particularly impacted neurodiverse users