Inclusive hybrid meeting system bridging remote and in-person participants — AI meeting agent, join flow redesign, enterprise design system.

When I joined the AWS hybrid meeting system team, the brief was clear: "Make the hybrid meeting experience better."

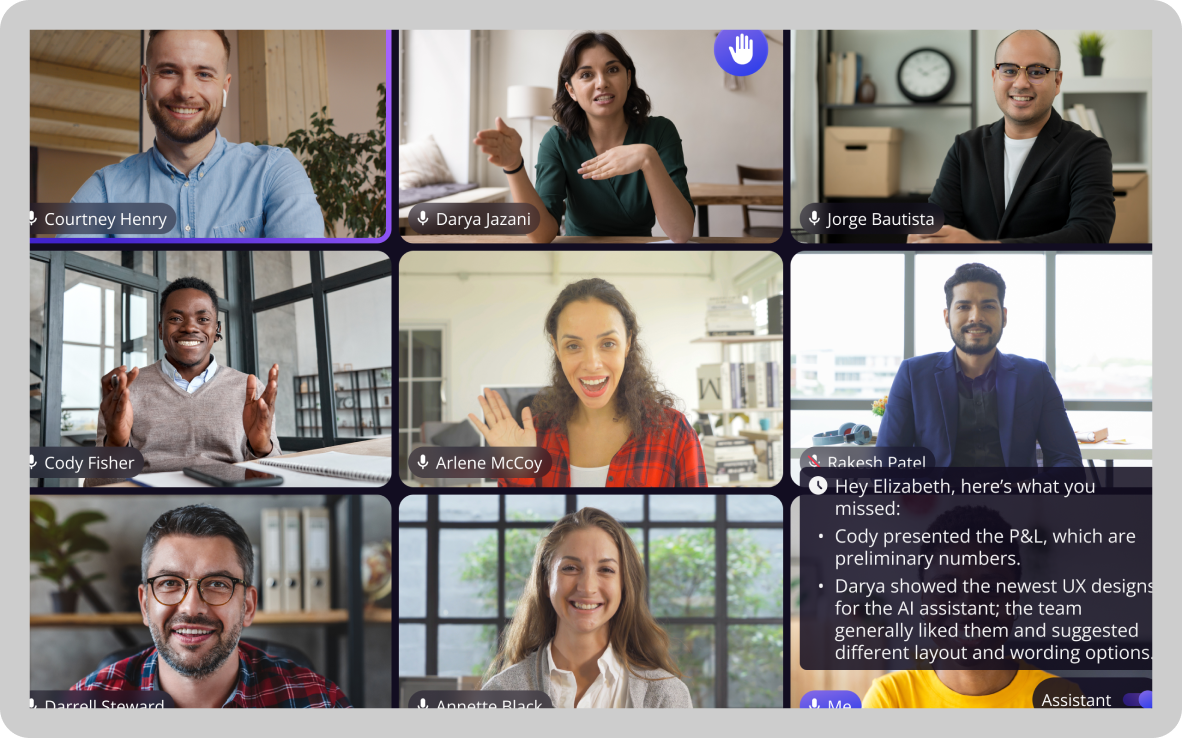

After shadowing 15 team meetings in my first month, I noticed that remote participants could see and hear fine, but they couldn't participate on equal footing. Take a 10-person meeting with 7 people in the room and 3 remote. The in-room participants made eye contact, read body language, and jumped into the conversation fluidly. The remote participants watched a static wide-angle shot of the room, waited for awkward pauses, and were frequently talked over. In effect, the meeting ran as if the remote people weren't there.

We had to resolve three tensions:

- Remote vs. in-room satisfaction: individual video tiles gave remote participants +40 NPS but in-room participants -20 NPS.
- Equity vs. adoption: individual cameras solved equity but created discomfort for in-room participants.
- AI adoption vs. privacy concerns: 83.9% wanted AI summaries, but 13% rejected engagement analysis as "frightening".

With only two months to deliver a working demo, the challenge was not just introducing AI into meetings but deciding when it should speak and when it should stay silent.

Business pressure: this was 2023. Every competitor was shipping AI engagement features. Leadership asked why we weren't leveraging ML capabilities.

Given the compressed timeline, I focused on delivering a grounded experience rather than exploring speculative AI behaviors, emphasizing clarity, relevance, and participant control. This meant deliberately rejecting several ideas that added unnecessary automation or surfaced information without enough context.

I partnered closely with PM and engineering to define feasible demo scope, while coordinating with third-party teams to understand platform constraints early. In parallel, I conducted targeted user conversations to validate what level of AI intervention felt supportive rather than intrusive.

The outcome: we demoed and shipped the AI meeting agent in two months. Trust remained high, with beta program NPS staying above 85. One VP's endorsement: "This is better than our OP1 executive rooms, can we deploy in all leadership spaces?"

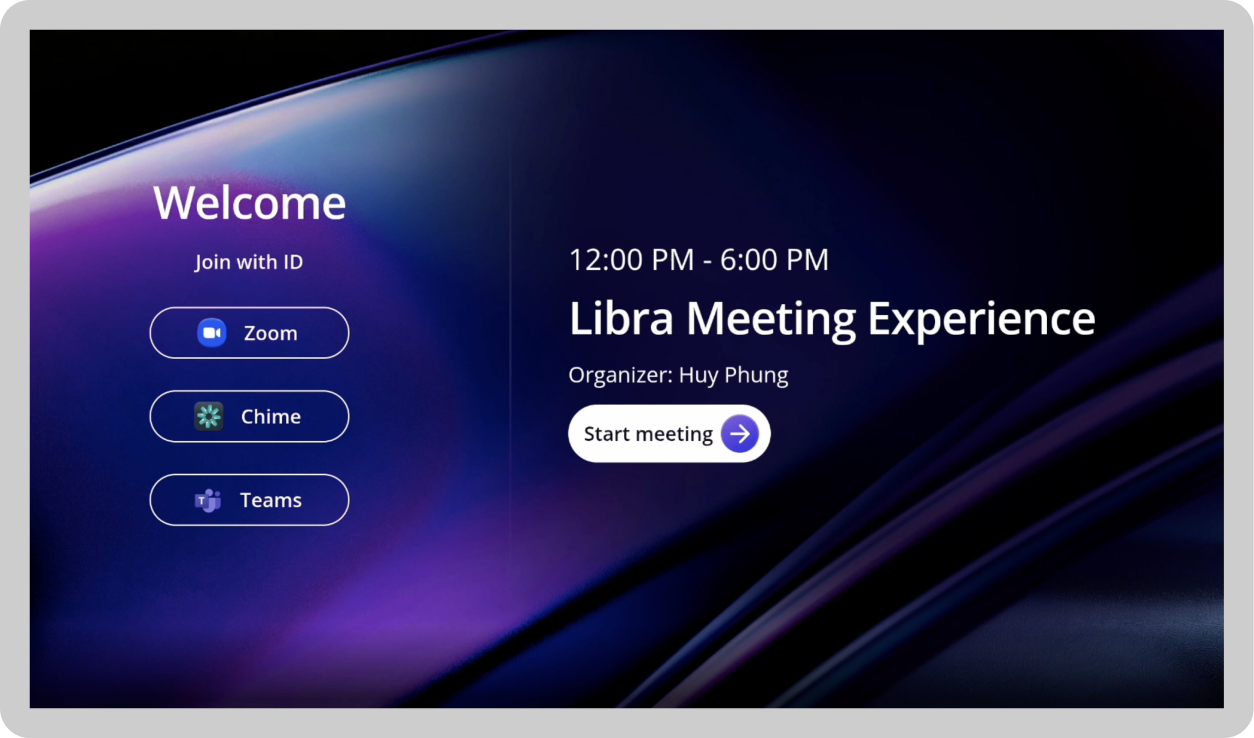

Scaled the enterprise design system to 400+ responsive components across Web, Mobile, and TV surfaces, reducing engineering implementation time and ensuring WCAG compliance across the suite. Created a primary display (tablet) for active content and a secondary display (TV) for passive reference, reducing the split attention that particularly affected neurodiverse users.